Google has recently announced Agent2Agent (A2A), a new open protocol for AI systems. What exactly is A2A, and why is it significant for the AI industry? Furthermore, how does it relate to MCP—does it compete with or complement it?

What Are AI Agents and Agentic AI? §

Before diving deep, let’s quickly demystify the terms for those who may not be familiar.

AI agents are software programs powered by large language models or other AI techniques, designed to act autonomously or semi-autonomously to perform specific tasks. Unlike traditional software scripts that follow rigid instructions, agents are more goal-directed — they interpret intent, make decisions, access tools or APIs, and even communicate with other agents or systems to accomplish their objectives.

Agentic AI refers to this class of AI systems that exhibit characteristics like planning, tool use, memory, and collaboration. It’s the difference between a chatbot that just answers questions and a system that can, say, book a flight by comparing options, checking your calendar, and informing your manager — all by coordinating various subtasks across internal and external services.

This shift from passive assistants to proactive digital collaborators is what makes agentic AI such a transformative leap in enterprise software.

The Need for a Common Language in Agentic AI §

AI agents are becoming practical co-workers in our digital workplaces. But as these agentic AI systems proliferate, a new challenge emerges: how will all these agents talk to each other? In a single company you might have one AI assistant for customer support, another analyzing financial data, and yet another managing IT workflows. If each agent lives in a silo, we miss out on huge productivity gains. Agents need a shared language to collaborate across applications and data silos. In other words, interoperability isn’t just a “nice-to-have” – it’s critical for scaling autonomy and avoiding a tangle of one-off integrations.

To draw an analogy, imagine early computers before networking: powerful on their own, but isolated. It took the Internet’s common protocols (like HTTP and TCP/IP) to unlock collaborative potential among computers. Similarly, AI agents today need their own “internet of agents”. Each agent might be built by different vendors or frameworks, yet to work together they must speak a common protocol. Without open standards, we risk an “agent Babel” where valuable AI workers can’t understand each other. This is why industry leaders are racing to define protocols for agent interoperability; for a future where AI agents seamlessly collaborate regardless of origin.

Understanding the Model-Context-Protocol (MCP) Paradigm §

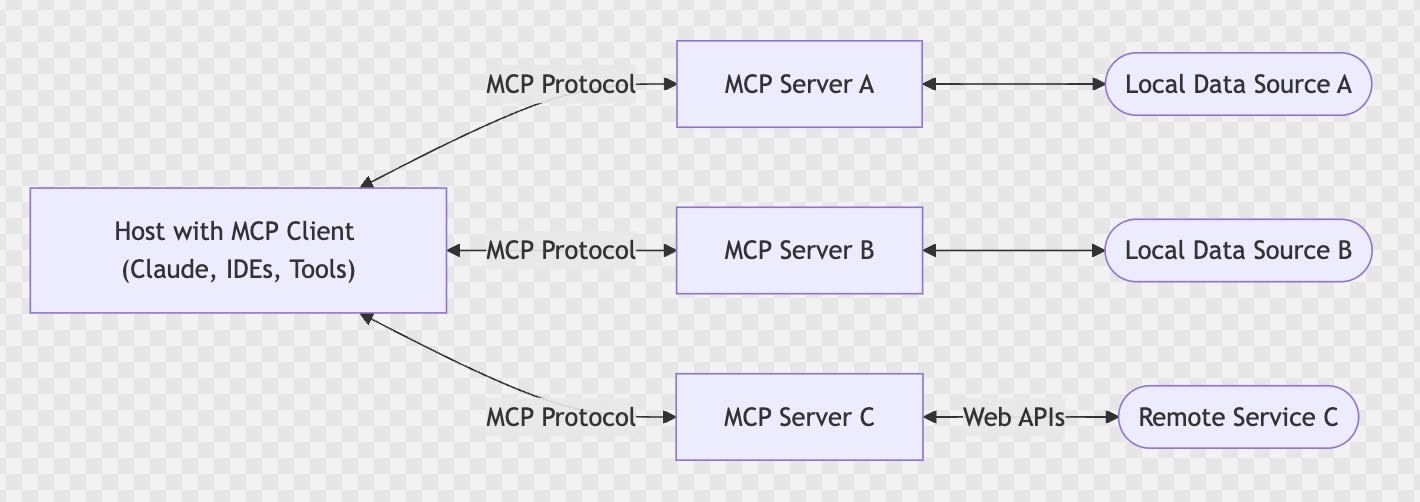

One pillar of this vision was laid by Anthropic with the Model Context Protocol (MCP). Think of MCP like a “USB-C port” for AI applications – a standardized plug-and-play interface connecting AI models to tools and data. In technical terms, MCP is an open standard that defines how external context (databases, APIs, file systems, etc.) is provided to language models or AI assistants. It creates a secure two-way channel between an AI model and its operating context — be it company data, third-party services, or user-specific information.

In the MCP paradigm, an AI agent isn’t an island; it’s a hub that can attach various tools and knowledge sources as context. For example, using MCP, a chatbot could query a CRM database or execute code via a standardized interface, regardless of who built that database connector. Anthropic’s vision was that by standardizing these interfaces, any large language model (LLM) can access a broad ecosystem of tools in a uniform way. This dramatically simplifies building complex agents: you can “plug in” new capabilities (like a Salesforce connector or a web browser tool) just as easily as plugging a new device into a USB-C port.

Crucially, MCP focuses on how an AI model uses tools and data – essentially the Model ↔ Context connection. What it doesn’t fully address is how multiple autonomous agents coordinate with each other. That’s where Google’s new protocol comes in, picking up the baton for the Protocol part of the Model-Context-Protocol triad.

Google’s Agent-to-Agent (A2A) Protocol: HTTP for AI Agents §

Enter Agent-to-Agent (A2A), Google’s newly released open protocol that fills the gap by standardizing how AI agents communicate with one another. A2A is exactly what it sounds like — a common language for agents to send messages, share information, and even delegate tasks amongst themselves. Just as HTTP enabled any web browser to talk to any web server, A2A promises that an agent built on Framework X can request help from an agent built on Framework Y, as long as both speak A2A.

(Image Credit: https://google.github.io/A2A)

What A2A Does: According to Google’s announcement, A2A enables agents to discover each other, communicate securely, and coordinate actions across different services and platforms. In practice, an agent makes itself discoverable by publishing a simple profile (a “public card”) over HTTP describing where it lives, what version it is, and what skills or APIs it offers. Think of this like an agent business card. Another agent can find this card and know how to talk to that agent – its location (URL), capabilities, and supported interaction patterns.

Critically, A2A doesn’t reinvent the wheel but builds on familiar web standards. The protocol leverages HTTP for requests, Server-Sent Events (SSE) for real-time updates, and JSON-RPC for structured calls. This means developers can integrate it into existing IT stacks with relative ease – your agents communicate over the same web technologies your apps already use. A2A supports different communication modes depending on the task: for quick back-and-forth it can use request/response or SSE streaming, and for long-running tasks it allows asynchronous workflows where one agent notifies another upon completion. In essence, it’s giving agents flexible “communication etiquette” suitable for everything from a brief question to an hours-long project hand-off.

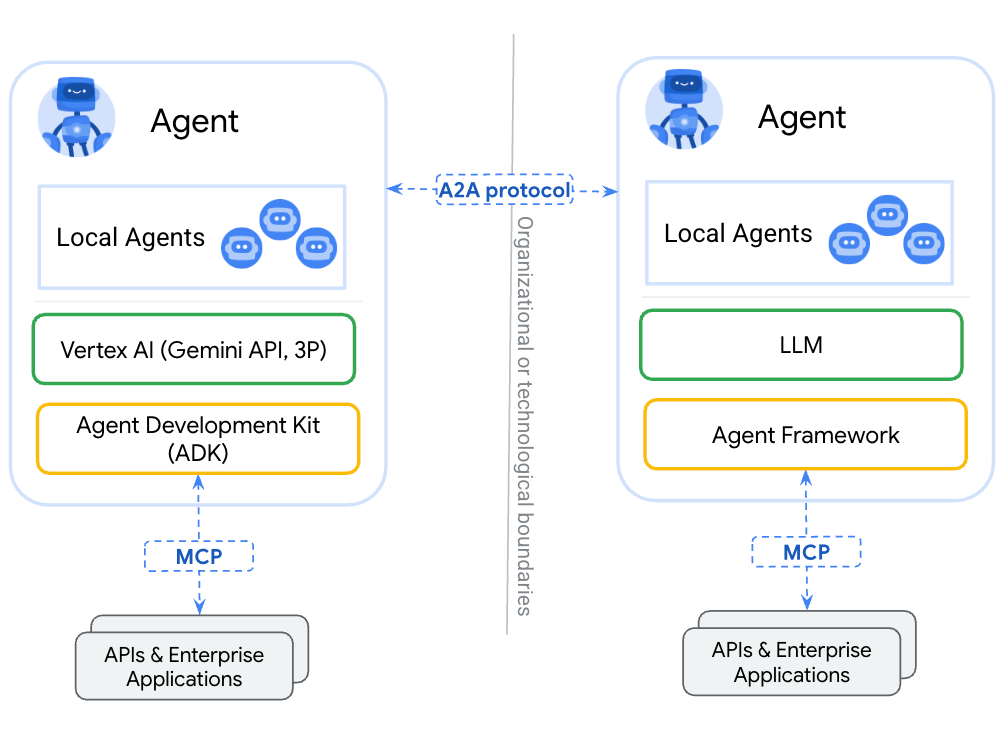

Complements, Not Competes, with MCP: Google was careful to position A2A as complementary to Anthropic’s MCP, not a competing standard. The two protocols address different layers of the agent ecosystem. Recall our paradigm: Model, Context, Protocol. MCP standardizes how an agent (the model) fetches and leverages external tools/data (context). A2A standardizes how agents engage in dialogues and collaborations with each other (protocol). As Google’s blog put it, “A2A is an open protocol that complements Anthropic’s MCP, which provides helpful tools and context to agents”. You can imagine a future AI platform where MCP-enabled tool integrations and A2A-enabled agent conversations work in tandem to achieve complex tasks.

To make this concrete, Google’s documentation gives a great example of an auto repair shop scenario. In the shop, each mechanic is assisted by an AI agent with specialized tools: one agent can control a car lift or wrench via MCP (structured tool APIs). Now, when a customer arrives describing a rattle noise, multiple agents (the “employees”) need to collaborate: the diagnostics agent asks the inspection agent to take a photo of the wheel, then an ordering agent might contact a supplier agent to get a replacement part. All of this agent-to-agent dialogue – receiving the customer’s problem, asking follow-up questions (“How long has it been leaking?”), sharing findings, dividing tasks – is handled by A2A. Meanwhile, whenever an agent needs to use a specific tool (like adjusting the lift or querying inventory), that interaction runs through MCP. In short, MCP is for plugging an agent into its tools, whereas A2A is for plugging agents into each other. Together, they enable a multi-agent workflow greater than the sum of its parts.

Why Interoperability Is Critical (and Inevitable) §

From my perspective as a fintech technology and product architect, the rise of open agent protocols feels reminiscent of the fintech API revolution. In banking, we saw how standard interfaces (think open banking APIs or India’s UPI) unlocked innovation by letting disparate systems work together securely. Before UPI, each bank had its own payment interface – today, any app can transact with any bank through a unified protocol, spurring an explosion of fintech creativity. Agentic AI is at a similar crossroads: without interoperability, we’ll end up with walled gardens of AI agents that can’t leverage each other’s strengths. With interoperability, we enable an “open market” of AI services that can freely mix-and-match to solve problems.

Open standards also future-proof architectures. Enterprise tech history teaches us that proprietary integrations eventually yield to standardized ones – because standards lower the integration cost for everyone. A2A’s design, in fact, was driven by enterprise needs: companies don’t want to be locked into a single vendor’s agent ecosystem. Google launched A2A with support from 50+ tech partners across the industry (from Atlassian and Salesforce to Oracle and Infosys), reflecting a broad consensus that no one company will build all the best agents. Interoperability ensures you can deploy the best-in-class AI agents for each job and have them cooperate seamlessly. As the Google Cloud team put it, A2A gives your agents a “common, open language to collaborate – no matter which framework or vendor they are built on”. This universality is essential for businesses that run diverse applications across cloud environments.

There’s also an open-source ecosystem angle. An open protocol like A2A means that community-built agents and big enterprise agents can speak the same lingo. This breaks down the wall between proprietary enterprise AI and open-source AI projects. A startup or OSS project can focus on a niche agent (say, an expert code-debugger agent) and still plug it into a larger enterprise workflow via A2A. Likewise, an enterprise can benefit from community contributions without heavy custom integration. Open standards tend to create rich ecosystems: we saw this with HTTP (browsers, servers, RESTful APIs) and we’re likely to see it here. In the words of one analysis, protocols don’t just enable communication – they shape who builds what and how fast the ecosystem grows. By defining a clear contract for agent interaction, A2A lowers the barrier to entry for new agents and tools to join the party, whether developed by a tech giant or a lone developer.

A Quick JavaScript Demo: Agents Talking via A2A §

To illustrate how an agent might use A2A in practice, let’s consider a simplified JavaScript example. Suppose we have two agents: OrderAgent (handles orders) and InventoryAgent (manages stock levels). Each agent exposes an HTTP endpoint and a “card” describing its capabilities. Here’s a conceptual snippet demonstrating how one agent could discover another’s card and send a task request:

// Example agent "card" for the InventoryAgent (discovery metadata)

const inventoryAgentCard = {

name: "InventoryAgent",

version: "1.0.0",

endpoint: "https://api.example.com/agents/inventory", // base URL for agent

skills: ["checkStock", "reserveItem", "updateStock"] // capabilities offered

};

console.log("Discovered agent card:", inventoryAgentCard);

// OrderAgent wants to ask InventoryAgent to reserve an item

async function requestAgentTask(agentCard, action, params) {

const url = `${agentCard.endpoint}/${action}`; // e.g. https://.../reserveItem

const res = await fetch(url, {

method: "POST",

headers: { "Content-Type": "application/json" },

body: JSON.stringify(params)

});

return res.json(); // parse the JSON result from InventoryAgent

}

// Usage: OrderAgent reserving a laptop through InventoryAgent

(async () => {

const result = await requestAgentTask(inventoryAgentCard, "reserveItem", { itemId: "Laptop-123", quantity: 1 });

console.log("Task result:", result);

})();

In this snippet, the OrderAgent first retrieves the InventoryAgent’s card (which could be via a known registry or URL). The card tells us the InventoryAgent’s endpoint and what actions (skills) it supports. Then requestAgentTask constructs an HTTP POST to the InventoryAgent’s reserveItem action. The response (perhaps a confirmation or error) comes back as JSON.

This is a simplistic view, but it aligns with A2A’s fundamentals: agents expose endpoints for their skills, accept structured requests, and return results in a standard format. In a real A2A scenario, there would likely be authentication, error handling, and possibly asynchronous patterns (e.g. the request might immediately return a task ID and the InventoryAgent later pushes the completion result via callback or webhook). The key point is that with A2A, both agents adhere to a common contract for requests and responses. A developer could swap out either agent with a different implementation (another vendor or an open-source agent) and as long as it speaks A2A, the interaction will still work. This loose coupling is exactly what we want for scalable, modular AI systems.

How Does A2A Compare to Other Agent Frameworks? §

It’s important to distinguish A2A – an interoperability protocol – from the many frameworks that help build AI agents. In recent months we’ve seen a proliferation of agent frameworks and orchestration tools: Microsoft’s AutoGen, the open-source OpenAgents platform, LangChain’s LangGraph, OpenAI’s tools/plug-ins, and more.

These tools often work best within their own ecosystems or within the boundaries of a single application context. A2A, on the other hand, introduces a common communication layer that enables cross-platform, cross-framework agent interaction.

- AutoGen provides a powerful multi-agent conversation framework in Python, but the agents typically operate within a predefined local environment. A2A allows those same agents to interface with external agents or services built using completely different tech stacks.

- LangGraph brings structured orchestration and control over agent workflows. A2A could enhance it by allowing dynamic runtime collaboration with agents outside the graph.

- OpenAgents simplifies deploying specialized agents but lacks built-in multi-agent collaboration support. With A2A, these agents can now communicate and delegate tasks to each other or external services.

In short, these frameworks are great for building agent capabilities and control flows. A2A is what helps those agents participate in a larger ecosystem. It doesn’t replace these tools—it amplifies them by enabling them to speak a shared protocol.

From Fintech Architecture to AI Agents: My Take §

As a technology architect and developer at Eko, where I’ve spent over a decade building low-code, extensible, and framework-driven applications & SaaS platforms for Fintech, we’re now applying similar architectural principles to AI. We have already been actively experimenting with the Model-Context-Protocol (MCP) to bring agentic AI into real-world business workflows.

MCP has helped us decouple tools and services from specific models or agent frameworks. Instead of binding a feature tightly to OpenAI or Anthropic or LangChain, we expose capabilities as reusable context interfaces that any compliant agent can access. This means we can test different LLMs or orchestrators side-by-side without rewriting business logic.

Interoperability protocols like MCP are helping us think beyond single-model capabilities. They’re laying the groundwork for a multi-agent, multi-model infrastructure that’s both future-proof and enterprise-ready.

From this vantage point, the emergence of open agent protocols like A2A and MCP feels very familiar. In fintech, we’ve seen how common protocols unlock ecosystems—UPI and open banking APIs are prime examples. When standards emerge, innovation accelerates. When they don’t, systems remain isolated and integration becomes costly. We’re witnessing a similar tipping point in AI.

Conclusion §

We’re entering a new era where AI agents will become foundational to how software operates and scales. But just like APIs enabled the SaaS explosion, open protocols like A2A and MCP will be essential to unlock agent-driven ecosystems.

These protocols make agents modular, interoperable, and composable—qualities every modern software architecture needs. They also create opportunities for businesses to innovate faster and for developers to build once and integrate everywhere.

If you’re building the future of intelligent systems, now’s the time to embrace standards that ensure your agents don’t just work—they work together.